In part 1, I illustrated how our decimal/Arabic number system is based on our ability to count up to nine with a single digit. After nine, we add a second digit (with a weighting of ten) and then count the first digit from zero to nine again.
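To make the idea of place weighting concrete, here is a small Python sketch that splits a decimal number into its digits and the weight of each position (1, 10, 100, …). The function name is made up for illustration, and the sketch assumes a non-negative integer.

```python
def decimal_digits_with_weights(n):
    """Split a non-negative integer into (digit, weight) pairs.

    Hypothetical helper: weights are 1, 10, 100, ... and the result is
    listed most significant digit first.
    """
    pairs = []
    weight = 1
    while True:
        digit = n % 10          # the rightmost digit counts 0..9
        pairs.append((digit, weight))
        n //= 10                # move on to the next position
        if n == 0:
            break
        weight *= 10            # each new position is worth ten times more
    return list(reversed(pairs))


parts = decimal_digits_with_weights(347)
print(parts)                            # [(3, 100), (4, 10), (7, 1)]
print(sum(d * w for d, w in parts))     # 347
```

Adding up each digit times its weight gives back the original number, which is exactly what we do in our heads when we read "347".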

The best way to explain how computers count is with an example. First, let’s review the way we count.

Three versions of 3

When someone shows us three fingers (with one finger and the thumb folded down), we interpret it as the number three:

However, there are other ways of showing the same number:

This works because we assign the same value to every finger: any three raised fingers signify the number three.
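Here is one way to model equal-value fingers in Python. It assumes a hand is a tuple of five booleans (thumb first, then index, middle, ring and pinky), with True meaning the finger is up; this representation and the helper name are illustrative choices, not anything standard. Because every finger is worth 1, only the number of raised fingers matters.

```python
def equal_weight_value(hand):
    """Count the raised fingers; every finger is worth 1 (hypothetical helper)."""
    return sum(1 for finger_up in hand if finger_up)


# Three different hands (thumb first), each with exactly three fingers up.
hands = [
    (False, True, True, True, False),  # thumb and pinky folded down
    (True, True, True, False, False),  # thumb, index and middle up
    (False, False, True, True, True),  # middle, ring and pinky up
]

for hand in hands:
    print(equal_weight_value(hand))    # prints 3 for every hand
```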

All fingers are not created equal

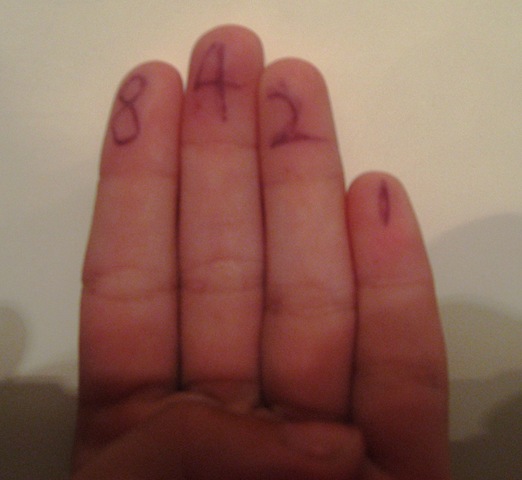

Computers use an analogous counting system, except that each finger is assigned a different value. Look closely at the picture, and you’ll notice the numbers eight, four, two, and one on the fingers.

When we see a hand with some fingers up, we add up the numbers on the raised fingers. Some examples:
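The same adding can be sketched in Python. Here a hand is modeled (hypothetically) as a tuple of four booleans, leftmost finger first, paired with the values 8, 4, 2, 1 shown on the fingers. The three hands below use the finger patterns of examples A, B and C discussed in this post.

```python
# Values on the fingers, leftmost finger first.
FINGER_VALUES = (8, 4, 2, 1)


def weighted_value(hand):
    """Add up the values of the raised fingers (hypothetical helper).

    hand: four booleans, leftmost finger first; True means the finger is up.
    """
    return sum(value for value, up in zip(FINGER_VALUES, hand) if up)


print(weighted_value((True, True, True, False)))   # 14 (8 + 4 + 2), example A
print(weighted_value((False, True, True, True)))   # 7  (4 + 2 + 1), example B
print(weighted_value((True, False, False, True)))  # 9  (8 + 1),     example C
```

Unlike equal-value counting, the same number of raised fingers can now mean different numbers: A and B both have three fingers up, yet they stand for fourteen and seven.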

Putting it in numbers

Here’s how computers represent each of the numbers above: they assign a 1 or a 0 to each finger position. If the finger is up, it’s assigned a 1; if it’s down, it’s assigned a 0.

So example A (fourteen: 8+4+2) would be represented as 1110, example B (seven: 4+2+1) as 0111, and example C (nine: 8+1) as 1001.
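Writing 1 for up and 0 for down turns a hand into a string of bits, and Python’s built-in `int(s, 2)` reads such a string back as a binary number. A minimal sketch, assuming a hand is modeled as a tuple of four booleans, leftmost finger first (the helper names are illustrative):

```python
def hand_to_bits(hand):
    """Write a 1 for each raised finger and a 0 for each lowered one."""
    return "".join("1" if up else "0" for up in hand)


def bits_to_number(bits):
    """Interpret a string of 1s and 0s as a binary (base-2) number."""
    return int(bits, 2)


example_a = (True, True, True, False)
bits = hand_to_bits(example_a)
print(bits, bits_to_number(bits))                      # 1110 14
print(bits_to_number("0111"), bits_to_number("1001"))  # 7 9
```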

Incidentally, the 8, 4, 2, 1 weighting on the fingers is called binary weighting: each finger is worth twice the finger to its right. To complete the analogy, each finger represents one bit, a single unit of binary information (a 1 or a 0). With four fingers (four bits), we can represent any number from zero (all fingers down: 0000) to fifteen (all fingers up: 1111, or 8+4+2+1).
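A short Python sketch of binary weighting and the range it gives. Each weight is a power of two, and `format(n, "04b")` prints a number as exactly four bits, so looping from 0 to 15 lists every possible four-finger hand. The variable names are illustrative.

```python
NUM_FINGERS = 4

# Binary weighting: each finger is worth twice the finger to its right.
weights = [2 ** i for i in reversed(range(NUM_FINGERS))]
print(weights)  # [8, 4, 2, 1]

# Four bits give 2**4 = 16 patterns: 0 (all down) through 15 (all up).
for n in range(2 ** NUM_FINGERS):
    print(format(n, "04b"), n)  # first line "0000 0", last line "1111 15"
```

Adding a fifth finger would double the number of patterns again, to 32 (0 through 31).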

To Pralie: I’m a child (I’m 12 years old) and I can use binary easily… then again, I am a freakin’ genius… my IQ is 146.

I actually understand quite well… Now, would you like to tell me how this fits in with computer programs, how the bits turn into information fed to the computer, and how the computer interprets it and turns it into visuals? (P.S. Do not reply by email… it IS anonymous and will seriously mess up your computer if you even attempt to contact it… sorry, it’s precautionary.)

Actually, Pralie, I was taught Binary and Hexadecimal by my Dad at the age of 9 using a very similar method to this, and I’m not very good at maths.

Illustration B is actually showing the number 7

Thanks, Sami. It’s fixed now.

You FAIL! This would never help any children. You cannot teach children binary code unless they are freaking geniuses!
